I finally tested the new nightly 0.7 thing and I'm outraged. Why was this added? It's completely useless and unfitting.

First of all, the 2.5 0.7 servers can't offer anything new or cool; the best gamemodes were already ported to ddnet/0.6 trunk.

Secondly, if Chiller is a 0.7 fan, why would he add 0.7 to ddnet? This change assumes 0.7 players will use ddnet instead of the teeworlds client, and obviously they will stay and play on ddnet servers instead, cuz they don't suck. We already have sixup support in case someone wants to play from 0.7. Sixup and ddnet07 are opposite in purpose, so this makes no sense.

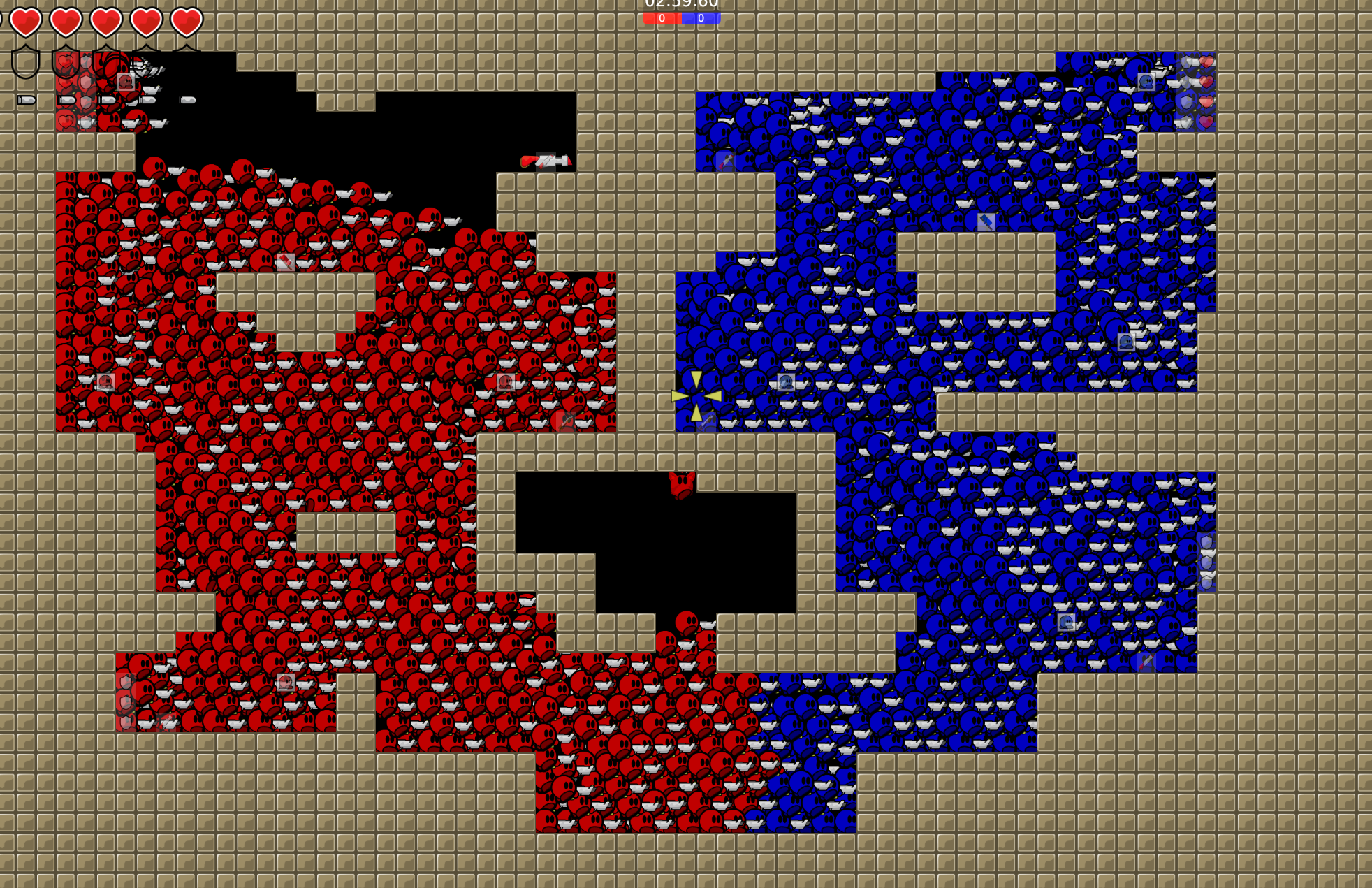

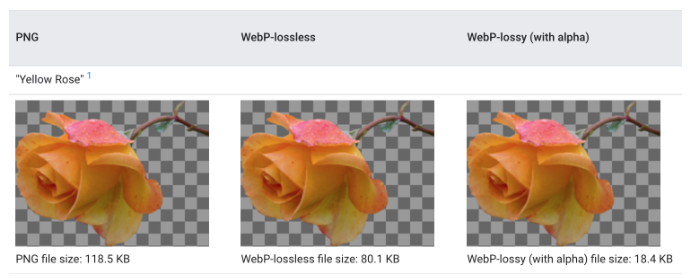

Thirdly, it looks fucking bad. Chiller copy-pasted skin and player rendering from 0.7. I didn't dive into the actual code, but I suppose it looks like a mess. DDNet is already losing its unique style cuz of weird community skins, and now this: completely non-fitting 0.7 skin rendering among 0.6 gameskins/entities/menu/maps.

If this is still in nightly, is it possible for it to be reverted by any chance? I see a lot of negative reviews from old community members, a few maintainers, and people who lurk on updates from time to time.