DDraceNetwork

Development / developer

Development discussion. Logged to https://ddnet.tw/irclogs/ and connected with DDNet's IRC channel, Matrix room and GitHub repositories. IRC: #ddnet on Quakenet | Matrix: #ddnet-developer:matrix.org | GitHub: https://github.com/ddnet

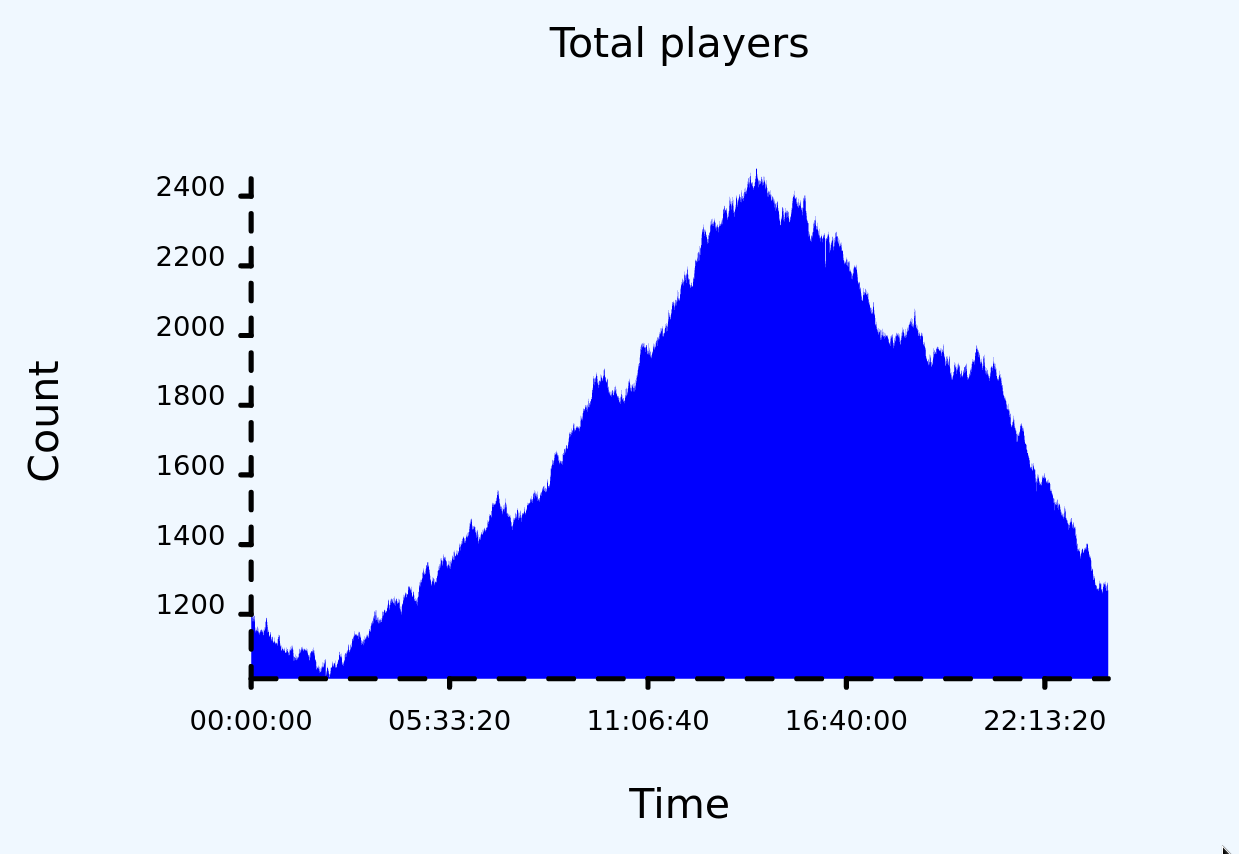

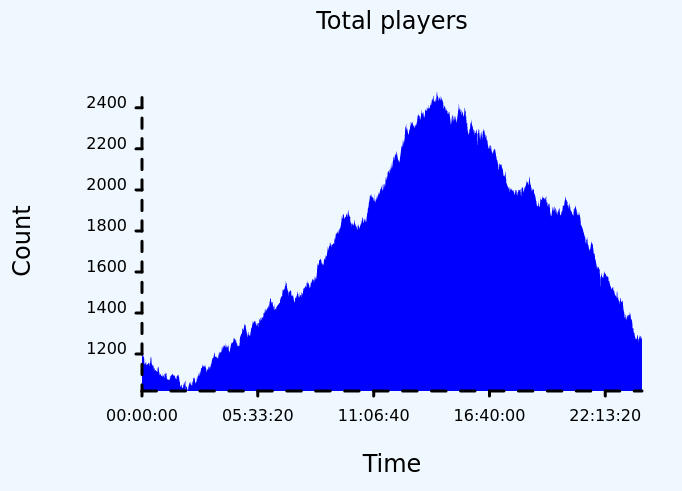

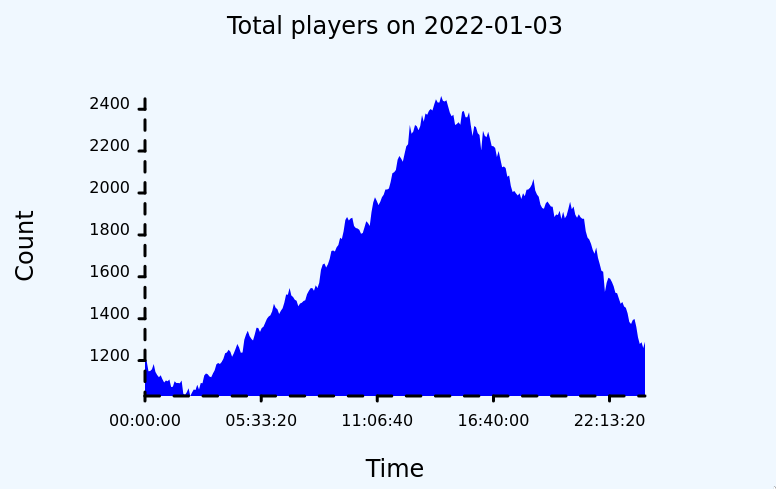

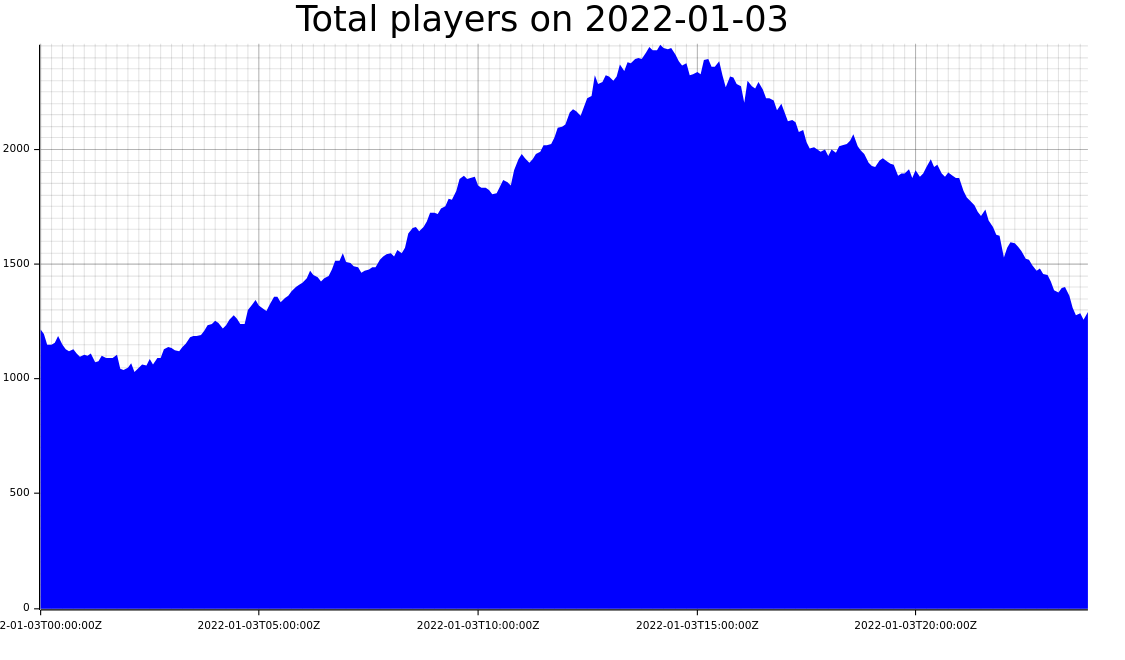

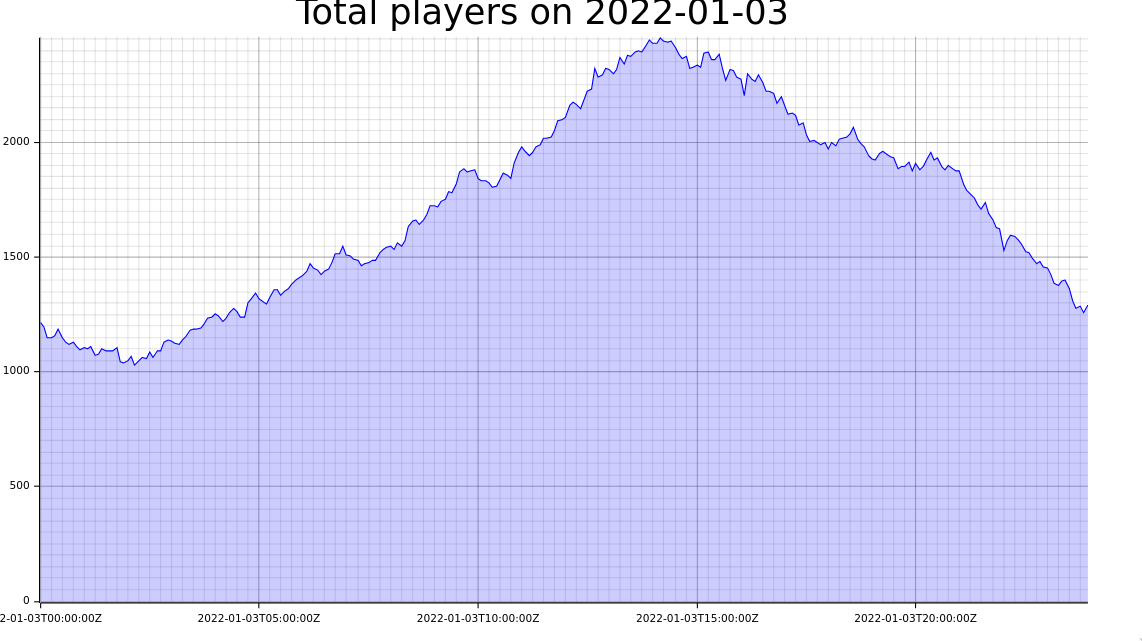

Between 2022-01-05 00:00:00Z and 2022-01-06 00:00:00Z